The presence of bots on the internet is nothing new, but their growing sophistication makes them harder to detect and block. By some industry estimates, more than 40% of all internet traffic now comes from automated bots, and a significant share of that is malicious. Attackers use bots for a range of purposes, from content scraping and credential stuffing to large-scale DDoS (Distributed Denial-of-Service) attacks.

For businesses, bot traffic can become an expensive burden. Excessive bot requests slow down websites, overload servers, and skew analytics, making it difficult to track real user behavior. In eCommerce, fraudulent bot traffic can inflate cart abandonment rates, distort conversion metrics, and waste ad spend on clicks that never came from real customers.

Website security professionals need to distinguish legitimate bot traffic from harmful bot traffic to keep operations running smoothly. Good bots, such as search engine crawlers, uptime monitoring tools, and AI chatbots, are essential to the online ecosystem. Bad bots, however, can cause serious and lasting damage if left unchecked.

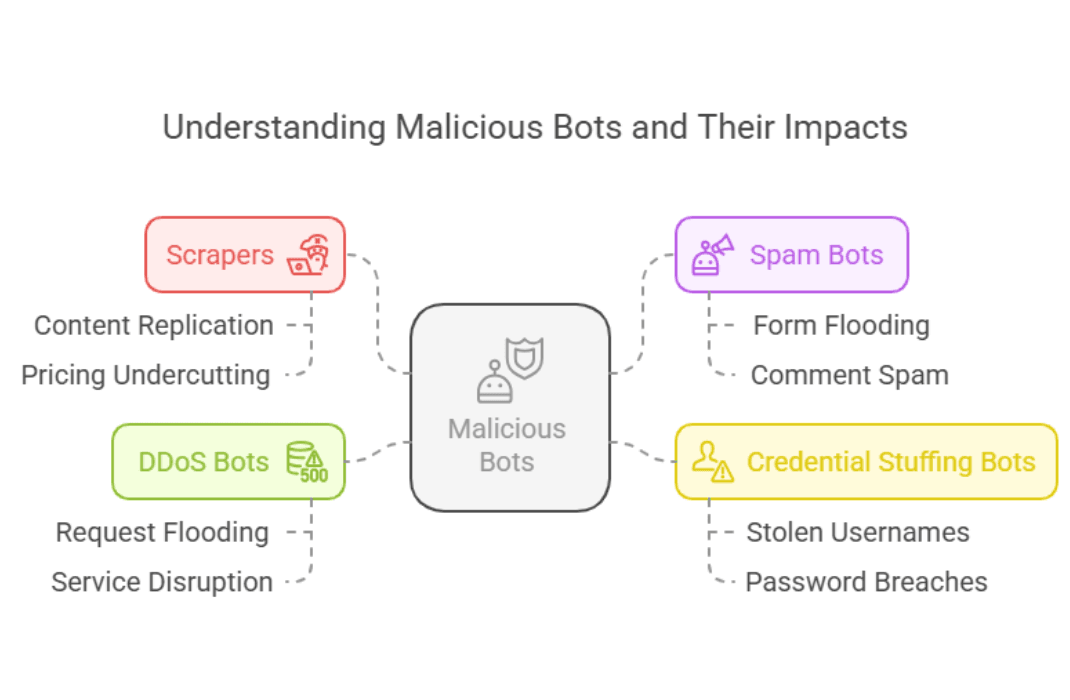

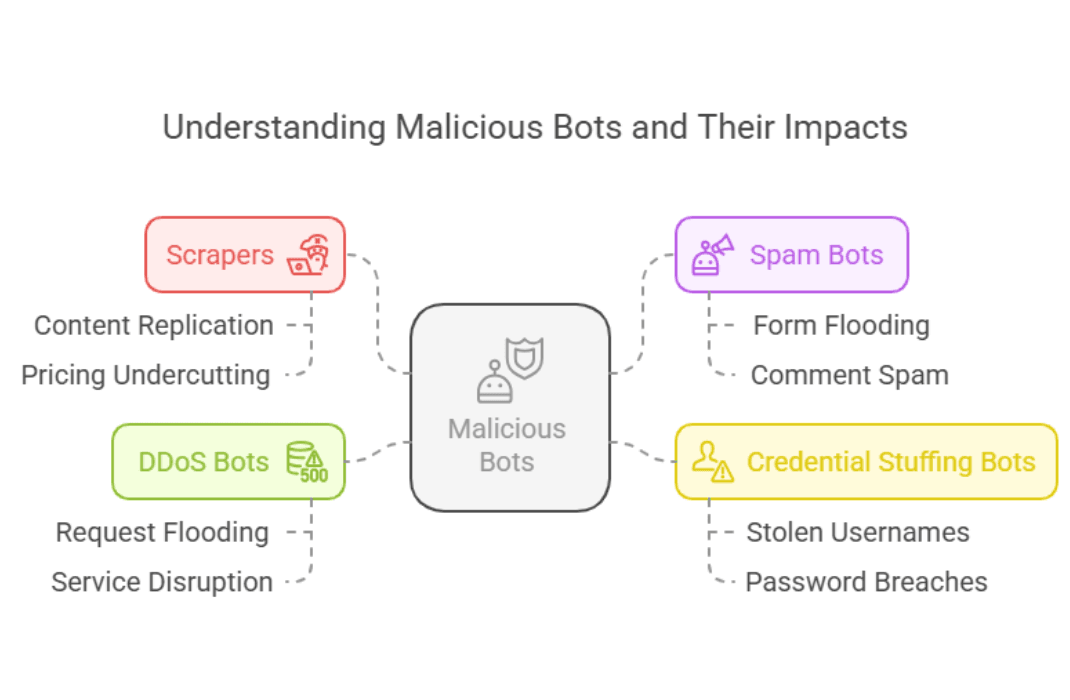

Types of malicious bots that threaten websites

Different bots serve different purposes, but the most harmful ones often operate in the shadows, going unnoticed until significant damage is done. The most common types of malicious bot traffic include:

- Scrapers – Bots that steal website content, pricing data, and other valuable information. Competitors may use these bots to copy content or undercut pricing strategies.

- Spam Bots – Automated scripts that flood forms, comment sections, and reviews with spam links, degrading user experience and credibility.

- Credential Stuffing Bots – Bots that try to gain unauthorized access by automatically testing username and password pairs stolen in previous data breaches, exploiting users who reuse passwords across sites.

- DDoS Bots – Networks of compromised machines (botnets) that generate a massive volume of requests, overloading servers and making the website unavailable to real users.

Websites that fail to monitor and mitigate these threats often face unexpected traffic spikes, slow page loads, and frequent server crashes.
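One basic form of monitoring is counting how many requests each client sends within a short trailing time window and flagging clients that exceed a limit, which can surface brute-force login attempts or request floods. The sketch below implements a sliding-window log in Python. It is an illustration under stated assumptions, not a production defense: the class name `SlidingWindowMonitor`, keying clients by IP address, and the example thresholds are all hypothetical, and timestamps are assumed to arrive in non-decreasing order per client.

```python
from collections import defaultdict, deque


class SlidingWindowMonitor:
    """Hypothetical sliding-window log: flags clients whose request count
    within the trailing window exceeds max_requests.

    Assumptions: clients are keyed by a string such as an IP address, and
    each client's timestamps arrive in non-decreasing order.
    """

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Per-client queue of recent request timestamps, oldest on the left.
        self._hits: dict[str, deque[float]] = defaultdict(deque)

    def record(self, client: str, timestamp: float) -> bool:
        """Record one request and return True if the client is over the limit."""
        hits = self._hits[client]
        hits.append(timestamp)
        # Discard timestamps that have fallen out of the trailing window.
        cutoff = timestamp - self.window_seconds
        while hits and hits[0] <= cutoff:
            hits.popleft()
        return len(hits) > self.max_requests


if __name__ == "__main__":
    # Example thresholds (hypothetical): at most 5 requests per 10 seconds.
    monitor = SlidingWindowMonitor(max_requests=5, window_seconds=10.0)
    # A burst of 8 requests, one per second, from a documentation-range IP.
    for t in range(8):
        if monitor.record("203.0.113.7", float(t)):
            print(f"t={t}s: 203.0.113.7 exceeded the limit")
```

A per-client limit like this is simple and cheap, but it has clear blind spots: a distributed attack spreads requests across many addresses so that no single client crosses the threshold, and the per-client queues grow unless idle entries are periodically evicted. In practice it is usually one signal among several rather than a complete bot-detection strategy.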